MCP Server

Windsurf

Introduction

Cascade is an AI coding assistant in the Windsurf Editor that can connect various large language models (LLMs) to the QuantConnect MCP Server. This page explains how to set up and use the server in Windsurf.

Getting Started

To connect the Cascade agent in the Windsurf Editor to the QuantConnect MCP Server, follow these steps:

- Install and open Docker Desktop.

- Install and open the Windsurf Editor.

- Download the QuantConnect extension.

- In Windsurf, press Ctrl+Shift+X to open the Extensions panel.

- Drag and drop the .vsix file you downloaded onto the Extensions panel.

- In the top navigation menu, click .

- On the Windsurf Settings page, in the Cascade section, click .

- On the Manage MCP Servers page, click .

- Edit the mcp_config.json file that opens to include the following QuantConnect configuration:

```json
{
  "mcpServers": {
    "quantconnect": {
      "command": "docker",
      "args": [
        "run", "-i", "--rm",
        "-e", "QUANTCONNECT_USER_ID",
        "-e", "QUANTCONNECT_API_TOKEN",
        "-e", "AGENT_NAME",
        "--platform", "<your_platform>",
        "quantconnect/mcp-server"
      ],
      "env": {
        "QUANTCONNECT_USER_ID": "<your_user_id>",
        "QUANTCONNECT_API_TOKEN": "<your_api_token>",
        "AGENT_NAME": "MCP Server"
      }
    }
  }
}
```

- Press Ctrl+S to save the mcp_config.json file.

- Press Ctrl+L to open Cascade and begin interacting with the agent.

The Windsurf IDE lags behind in updating VS Code extensions. To get the latest version, use the "Download the QuantConnect extension" link in the steps above, which downloads the extension directly from the marketplace. The download should start automatically.

To get your user ID and API token, see Request API Token.

Our MCP server supports multiple platforms. Replace <your_platform> with linux/amd64 for Intel or AMD chips or with linux/arm64 for ARM chips (for example, Apple's M-series chips).
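If you are unsure which value applies, you can check the host's CPU architecture. The following is a minimal sketch, assuming Python 3 on the host; the helper name docker_platform and its lookup table are illustrative and cover only common platform.machine() values, not every architecture.

```python
import platform
from typing import Optional

# Illustrative mapping from platform.machine() values to the Docker
# platform strings listed above. Not exhaustive.
_DOCKER_PLATFORMS = {
    "x86_64": "linux/amd64",   # Intel/AMD on Linux and macOS
    "amd64": "linux/amd64",    # Intel/AMD on Windows (reported as "AMD64")
    "arm64": "linux/arm64",    # Apple M-series on macOS, ARM on Windows
    "aarch64": "linux/arm64",  # ARM on Linux
}


def docker_platform(machine: Optional[str] = None) -> str:
    """Return the --platform value for `machine` (defaults to this host)."""
    name = (machine or platform.machine()).lower()
    if name not in _DOCKER_PLATFORMS:
        raise ValueError(f"Unrecognized CPU architecture: {name!r}")
    return _DOCKER_PLATFORMS[name]
```

Calling docker_platform() with no argument inspects the current machine. On an Apple M-series Mac, an x86_64 Python build running under Rosetta reports x86_64, so run the check with a native Python to get linux/arm64.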

If you run multiple agents simultaneously, set a unique value for the AGENT_NAME environment variable for each agent so you can tell which agent sent each request.
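Before running several agents at once, you can check that their configurations don't share an AGENT_NAME. The following is a minimal sketch, assuming each agent's configuration file uses the mcpServers layout from Getting Started and runs the quantconnect/mcp-server image; the helper names are hypothetical, and where each client stores its file depends on the client.

```python
import json
from collections import Counter
from typing import List

IMAGE = "quantconnect/mcp-server"  # image name from the Getting Started configuration


def load_config(path: str) -> dict:
    """Read an MCP configuration file such as mcp_config.json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def agent_names(config: dict) -> List[str]:
    """Return AGENT_NAME for each server entry that runs the QuantConnect image.

    An entry without AGENT_NAME is reported as an empty string.
    """
    names = []
    for server in config.get("mcpServers", {}).values():
        if IMAGE in server.get("args", []):
            names.append(server.get("env", {}).get("AGENT_NAME", ""))
    return names


def duplicate_agent_names(*configs: dict) -> List[str]:
    """Return the AGENT_NAME values shared by more than one QuantConnect server."""
    counts = Counter(name for config in configs for name in agent_names(config))
    return sorted(name for name, count in counts.items() if count > 1)
```

For example, duplicate_agent_names(load_config(windsurf_path), load_config(other_path)) returns an empty list when every agent has its own name; windsurf_path and other_path are placeholders for each client's configuration file.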

To keep the Docker image up to date, pull the latest MCP server image from Docker Hub in a terminal:

```shell
docker pull quantconnect/mcp-server
```

If you have an ARM chip, add the --platform linux/arm64 option (for example, docker pull --platform linux/arm64 quantconnect/mcp-server).

Models

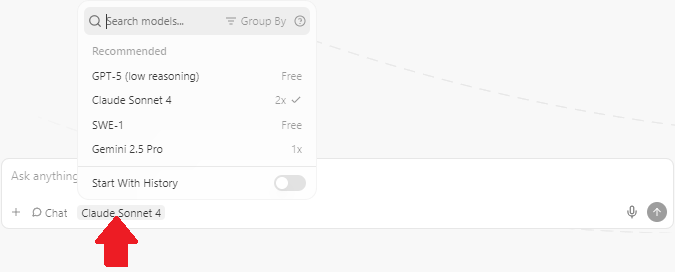

Windsurf supports several LLMs that you can use in the Cascade panel, including GPT, Claude, and Gemini. To change the model, click the model name at the bottom of the Cascade panel and then click the name of the model to use.

To view all the available models, see Models in the Windsurf documentation.

Quotas

There are no quotas on the QuantConnect API, but Windsurf Cascade and the LLMs have their own. To view them, see Plans and Credit Usage in the Windsurf documentation and the quota documentation for the model you use.

Troubleshooting

The following sections explain some issues you may encounter and how to resolve them.

Connection Error Code -32000

The docker run ... command in the configuration file also accepts a --name option, which sets the name of the Docker container when the MCP Server starts running.

This error occurs when your computer tries to start two MCP Server containers with the same name, because Docker requires container names to be unique.

To avoid the error, remove the --name option and its value from the configuration file.
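The same edit can be scripted. The following is a minimal sketch, assuming the mcpServers layout from Getting Started with the image as the last argument, so every --name belongs to docker run rather than to the container; the function names are hypothetical.

```python
import copy
import json
from typing import List


def strip_name_option(args: List[str]) -> List[str]:
    """Return docker run arguments without --name and its value.

    Handles both "--name", "<value>" and "--name=<value>".
    """
    cleaned = []
    skip_value = False
    for arg in args:
        if skip_value:  # this is the container name that followed --name
            skip_value = False
            continue
        if arg == "--name":
            skip_value = True
            continue
        if arg.startswith("--name="):
            continue
        cleaned.append(arg)
    return cleaned


def remove_container_names(config: dict) -> dict:
    """Return a copy of the config with --name removed from Docker-based servers."""
    fixed = copy.deepcopy(config)
    for server in fixed.get("mcpServers", {}).values():
        if server.get("command") == "docker" and "args" in server:
            server["args"] = strip_name_option(server["args"])
    return fixed


def fix_config_file(path: str) -> None:
    """Rewrite an MCP configuration file in place without --name options."""
    with open(path, encoding="utf-8") as f:
        config = json.load(f)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(remove_container_names(config), f, indent=2)
```

Servers whose command isn't docker are left untouched, since --name may mean something else to them. fix_config_file rewrites the whole file with two-space indentation, so the original formatting is not preserved.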

For an example of a working configuration file, see Getting Started.

Service Outages

The MCP server relies on the QuantConnect API and the client application. To check the status of the QuantConnect API, see our Status page. To check the status of Windsurf, see the Windsurf Status page. To check the status of the LLM, see its status page. For example, Claude users can see the Anthropic Status page.